
Behind the Interface: AI’s Hidden Influence on UX

Shruti Mankar

Posted On May 5, 2025

AI has become a constant in design workflows lately. It helps with layout suggestions, quick content drafts, and even full user flows. But something doesn’t sit right.

Some AI suggestions look good on the surface, but dig a little deeper and they turn out to be deceptive. They push users to click something they didn’t mean to, sign up without realizing it, or feel rushed into a choice.

This isn’t always intentional. But it’s happening.

What is Deceptive Design?

Deceptive design (also called “dark patterns”) is when a website or app is designed to trick you into doing something you didn’t fully agree to. For example:

- Adding extra items to your cart automatically

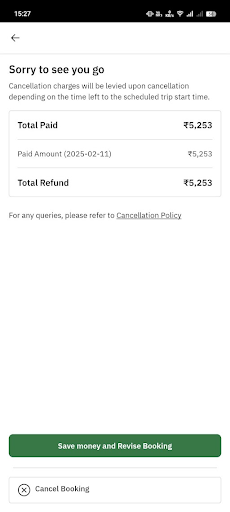

- Making it hard to cancel a subscription

- Hiding real costs until the last step

- Using confusing or misleading language that steers users into the wrong decision

Now AI is starting to learn these patterns and repeat them, without knowing they are harmful.

How AI Learns to Deceive

AI tools like ChatGPT or code generators don’t know right from wrong. They learn from patterns they see in websites, apps, and interfaces online.

A lot of existing designs already include deceptive elements, so the AI copies them.

Some examples:

Fake urgency

A 2025 study (Krauß et al.) asked people to use ChatGPT to build an online store. Without being prompted to, ChatGPT added countdown timers, limited-stock messages, and “Only 2 left!” alerts. These tricks create false urgency, convey false information, and pressure users to act fast.

Tricky UX components

Another study (Chen et al., 2024) found that 1 in 3 AI-generated UI elements had dark patterns. These included:

- Hidden costs

- Confusing buttons

- Auto-renewing subscriptions

Immersive environments, deeper manipulation

The Future of Privacy Forum showed how AI in VR/AR spaces can use biometric data (like eye movement, heart rate, or facial reactions) to create personalized pressure points.

These could be used to target users’ weaknesses, for example by exploiting their insecurities.

AI isn’t trying to trick people; it just copies what it sees, and a lot of what it sees is already unethical.

Can AI Help Fix This Too?

Surprisingly, yes. Some researchers are now using AI to spot and fight deceptive design.

Here’s how:

DPGuard

An AI system that analyzes website screenshots and automatically identifies more than 50 types of deceptive patterns.

Browser tools

Browser extensions can warn users when a site uses deceptive patterns to manipulate their actions, helping them stay informed.

Support for laws and guidelines

Regulators are starting to use AI to scan apps and websites faster, helping them enforce transparency and protect users.

What Designers Can Do

AI is becoming a powerful co-designer, but that power comes with quiet risks. When deceptive patterns slip into our work, intentionally or not, they erode user trust and long-term credibility. These aren’t always loud, visible problems. Often, they’re small nudges, misleading defaults, or patterns that look like good UX but aren’t.

As designers, we must look beyond AI’s output and understand the motivations it reflects. Our responsibility isn’t just to create usable interfaces; it’s to create honest ones.

Designers can:

- Audit AI outputs with a critical eye, especially when it comes to conversion tricks or frictionless flows.

- Write prompts that include ethical guardrails like “transparent,” “user-consent driven,” or “no urgency tactics.”

- Create internal pattern libraries that avoid dark patterns, so AI tools trained on your work reinforce the right examples.

- Push for review loops (design, test, reflect), especially when AI-generated content is involved.

- Be advocates for slow, intentional design when needed, rather than chasing only quick wins.
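To make “audit AI outputs” a little more concrete, here is a minimal Python sketch of a first-pass check a team could run over AI-generated markup before a human review. It is not DPGuard or any published tool: the phrase list, the category names, and the `audit` function are hypothetical, and simple keyword matching will miss many deceptive designs and flag some honest ones. Its only job is to point a reviewer at copy worth a second look.

```python
import re
from dataclasses import dataclass

# Hypothetical phrase list for a first-pass audit. The categories mirror
# patterns described in this post; the regexes are illustrative, not exhaustive.
PATTERNS: dict[str, list[str]] = {
    "fake urgency": [
        r"\bonly \d+ left\b",                # e.g. "Only 2 left!"
        r"\b(?:offer|sale|deal) ends in\b",  # countdown-timer copy
        r"\bhurry\b",
        r"\blimited time\b",
    ],
    "pre-selected add-on": [
        # A checkbox that is already ticked, e.g. an extra item added to the cart
        r"<input\b[^>]*\btype=[\"']checkbox[\"'][^>]*\bchecked\b",
    ],
    "auto-renewal": [
        r"\b(?:renews automatically|auto-renew\w*)",
    ],
}


@dataclass
class Finding:
    category: str
    snippet: str


def audit(markup: str) -> list[Finding]:
    """Return every snippet in `markup` that matches a pattern, for human review."""
    findings: list[Finding] = []
    for category, regexes in PATTERNS.items():
        for rx in regexes:
            for m in re.finditer(rx, markup, flags=re.IGNORECASE):
                findings.append(Finding(category, m.group(0)))
    return findings


# Hypothetical AI-generated checkout snippet used as a test input.
SAMPLE = """
<p>Only 2 left! Offer ends in 09:59</p>
<label><input type="checkbox" name="insurance" checked> Add travel insurance</label>
<small>Your plan renews automatically each month.</small>
"""

if __name__ == "__main__":
    for f in audit(SAMPLE):
        print(f"{f.category}: {f.snippet!r}")
```

A check like this is deliberately crude: it only reads text and markup, so it cannot catch visual tricks such as a faint, hard-to-find cancel link, which is where screenshot-based systems like DPGuard come in. Every finding is a prompt for a human decision, not a verdict.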

The future of AI in UX is still being shaped. Let’s make sure it’s built on clarity, consent, and care.